As large language models (LLMs) like GPT-4o, Claude 3, and Gemini 1.5, along with LLM-powered answer engines like Perplexity, increasingly influence search behavior, a growing chorus of marketers is prematurely declaring the death of traditional SEO ranking factors, backlinks chief among them.

This is fundamentally incorrect.

While the search landscape is evolving rapidly, the data structures that shape both LLM training corpora and search engine retrieval still weight backlinks heavily as core signals of authority, trust, and semantic association.

The signal may be filtered differently, but it’s far from irrelevant.

Let’s dissect exactly why backlinks still matter for LLMs — and why link building remains a first-order growth lever for organic visibility in the AI-native search era.

Contents

- The Evolution of Search Indexing & LLM Architecture

- Links as Proxies for Semantic Authority & Trust

- Co-Citation, Co-Occurrence, and the Emergent Link Graph

- Entity-Based Retrieval: Where Links Inform LLM Query Processing

- The Long-Term Compounding of Link Equity in an LLM World

- The Real Future: AI-Native Link Building

- Links as Structural DNA for AI Search

The Evolution of Search Indexing & LLM Architecture

Traditional search engines like Google originally leveraged link analysis via PageRank to calculate authority across the web graph.

Over time, this expanded to include hundreds of ranking factors: content relevance, user signals, topical authority, and entity recognition.
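To make the PageRank idea concrete, here is a minimal sketch of the classic power-iteration form of the algorithm on a toy web graph. The domain names, the 0.85 damping factor, and the iteration count are illustrative assumptions, not a description of how Google computes authority today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {page: [pages it links to]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped rank evenly across its outlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three sites link to a hub, and the hub links to one of them.
toy_web = {
    "a.example": ["hub.example"],
    "b.example": ["hub.example"],
    "c.example": ["hub.example", "a.example"],
    "hub.example": ["a.example"],
}
scores = pagerank(toy_web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the hub ends up with the highest score because it collects links from every other page, and the page the hub links to inherits most of that authority.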

Enter transformer-based models (LLMs), which don’t rank pages in the classical sense but instead generate answers based on probabilistic token prediction across vectorized representations of language.

However, most LLMs draw much of their training data from crawls of the web, which itself is built upon the hyperlink graph.

Ingestion Sources Still Depend on Links:

- Crawl prioritization: Heavily linked pages get crawled more frequently and indexed more reliably.

- Document authority weighting: Highly linked sources are given greater sampling weight in training corpora.

- Entity grounding: Links connect content to entities, helping LLMs resolve ambiguity and generate accurate outputs.

The LLM may not directly “count” links during inference, but its entire representation of knowledge was trained on link-weighted web content.
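As a rough illustration of what "sampling weight" could mean in practice, the sketch below draws documents from a corpus with probability tied to inbound link counts. The log-based weighting, the function names, and the URLs are all assumptions made for illustration; no model developer is known to use this exact scheme.

```python
import math
import random


def sampling_weights(inbound_links):
    """Map {url: inbound link count} to normalized sampling probabilities.

    Assumption: weight grows with log(1 + links), so heavily linked pages
    are favored without completely drowning out everything else.
    """
    raw = {url: 1.0 + math.log1p(count) for url, count in inbound_links.items()}
    total = sum(raw.values())
    return {url: weight / total for url, weight in raw.items()}


def sample_documents(inbound_links, k, seed=0):
    """Draw k documents (with replacement) according to the link-based weights."""
    weights = sampling_weights(inbound_links)
    urls = list(weights)
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return rng.choices(urls, weights=[weights[u] for u in urls], k=k)


# Hypothetical corpus with made-up inbound link counts.
corpus = {
    "well-linked.example/guide": 5000,
    "average.example/post": 40,
    "orphan.example/page": 0,
}
probs = sampling_weights(corpus)
batch = sample_documents(corpus, k=10)
```

Even the orphan page keeps a nonzero chance of being sampled here, which mirrors the article's point: links tilt the odds rather than acting as a hard gate.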

Links as Proxies for Semantic Authority & Trust

Modern LLMs are expected to be not only fluent but factually grounded. Fluent generation carries a significant risk of hallucination, which model developers actively work to mitigate via:

- Curation of high-authority datasets (e.g. Common Crawl + link-filtered corpora)

- Reinforcement Learning from Human Feedback (RLHF) that favors trusted, well-cited domains

- Retrieval-Augmented Generation (RAG) systems that pull real-time data from indexed knowledge bases (Google SGE/AI Mode, Perplexity, You.com, etc.)

The common denominator? Linked data.

Heavily linked pages disproportionately populate these systems because:

- They reflect collective human trust (crowd-sourced validation)

- They correlate with professional editorial standards

- They assist in disambiguating entities for better semantic mapping

Co-Citation, Co-Occurrence, and the Emergent Link Graph

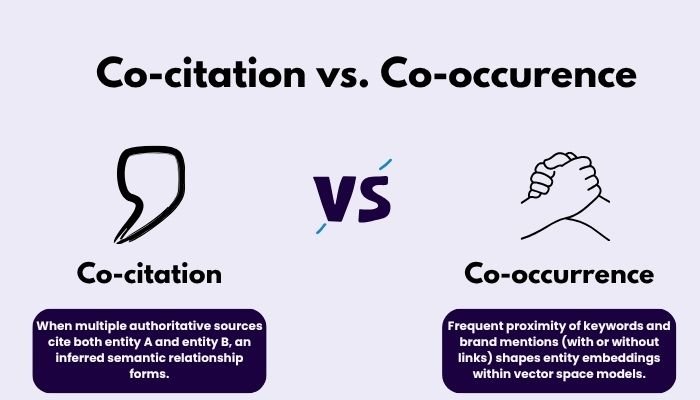

Even when explicit link signals are absent, implicit link structures still inform LLM representations via co-citation and co-occurrence:

- Co-citation: When multiple authoritative sources cite both entity A and entity B, an inferred semantic relationship forms.

- Co-occurrence: Frequent proximity of keywords and brand mentions (with or without links) shapes entity embeddings within vector space models.

However, raw link signals accelerate and strengthen these relationships. They reduce ambiguity and improve the model’s confidence in mapping entities to authoritative data points.

In short: links scaffold the semantic web that LLMs operationalize.
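Co-citation as described above is straightforward to count. The following sketch tallies how often two entities are cited by the same source page; the source pages and brand names are hypothetical, and real systems would work from far larger and noisier data.

```python
from collections import Counter
from itertools import combinations


def co_citation_counts(citations):
    """Count how often two targets are cited by the same source.

    citations: dict {source page: iterable of entities or URLs it cites}
    Returns a Counter keyed by alphabetically ordered (target, target) pairs.
    """
    counts = Counter()
    for cited in citations.values():
        # Deduplicate and sort so each pair is counted once per source.
        for a, b in combinations(sorted(set(cited)), 2):
            counts[(a, b)] += 1
    return counts


# Hypothetical sources and the brands each one cites.
citations = {
    "news.example/story": ["Brand A", "Brand B"],
    "review.example/roundup": ["Brand A", "Brand B", "Brand C"],
    "blog.example/post": ["Brand A", "Brand C"],
}
pairs = co_citation_counts(citations)
for pair, count in pairs.most_common():
    print(pair, count)
```

Pairs that appear together across many independent sources score highest, which is the inferred relationship the co-citation bullet describes.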

Entity-Based Retrieval: Where Links Inform LLM Query Processing

Google’s AI-powered Search Generative Experience (SGE) and other retrieval-augmented LLMs increasingly function as entity-based retrieval systems. Links still serve several core roles here:

- Establishing canonical entities: Strong inbound link profiles help resolve which site/entity is authoritative for ambiguous queries.

- Reinforcing topical clusters: High-quality backlinks build topical hubs that improve knowledge graph confidence.

- Citation in AI outputs: Many AI systems still provide source citations based on link graph prominence and retrieval confidence (e.g. Perplexity, Bing Copilot).

If your site is heavily linked by authoritative domains within your niche, you’re far more likely to surface in LLM answers, both as a direct citation and through the associations the model has learned in vector space.
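One way to picture how link prominence and retrieval confidence might combine is a re-ranking step that blends embedding similarity with an authority prior. This is a hypothetical sketch: the `authority` field, the 0.3 blend weight, and the two-dimensional embeddings are assumptions for illustration, and no vendor has published a formula like this.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_sources(query_vec, docs, authority_weight=0.3):
    """Order candidate sources by blended relevance and link authority.

    docs: list of dicts with 'url', 'embedding', and 'authority' in [0, 1]
    (authority is a hypothetical link-derived score, e.g. scaled PageRank).
    """
    scored = []
    for doc in docs:
        relevance = cosine(query_vec, doc["embedding"])
        score = (1 - authority_weight) * relevance + authority_weight * doc["authority"]
        scored.append((score, doc["url"]))
    return [url for _, url in sorted(scored, reverse=True)]


# Hypothetical candidates: two equally relevant pages and one off-topic page.
docs = [
    {"url": "low-authority.example", "embedding": [1.0, 0.0], "authority": 0.2},
    {"url": "high-authority.example", "embedding": [1.0, 0.0], "authority": 0.9},
    {"url": "off-topic.example", "embedding": [0.0, 1.0], "authority": 1.0},
]
ranking = rank_sources([1.0, 0.0], docs)
print(ranking)
```

Under these assumptions authority breaks the tie between equally relevant pages, but it cannot rescue a page that is irrelevant to the query.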

The Long-Term Compounding of Link Equity in an LLM World

Because LLMs refresh their knowledge through periodic retraining rather than continuously, link acquisition isn’t just about short-term rankings. It compounds:

- Heavily linked content is crawled, archived, and incorporated into LLM datasets.

- Entity embeddings are influenced by persistent link-based relationships.

- Citations across models snowball via recursive training data reinforcement.

This creates a feedback loop where early link building compounds model visibility for months or even years across multiple AI systems.

The Real Future: AI-Native Link Building

Going forward, link building remains mission-critical—but the tactics are evolving. Successful AI-native link strategies will emphasize:

- Entity-first link building: Building links that reinforce your brand as a recognized semantic entity.

- Topical trust signals: Securing links from highly relevant, narrowly defined topical authorities.

- AI-optimized content hubs: Creating comprehensive, linkable assets that LLMs readily ingest and reference.

- Brand citation optimization: Encouraging high-quality unlinked brand mentions alongside explicit backlinks to shape co-occurrence signals.

Links as Structural DNA for AI Search

In both classical SEO and AI-native search, backlinks function as foundational scaffolding for knowledge discovery.

They remain central to how information is weighted, ranked, and retrieved—whether by a PageRank-driven crawler or a trillion-parameter language model.

Those who ignore link building in the LLM era risk not only organic invisibility but eventual exclusion from the datasets that will define the next generation of AI-powered discovery.

Need help building AI-proof link authority?

That’s exactly what we do at Link.Build.

Modern, ethical, entity-focused link building that works for both today’s Google and tomorrow’s AI.

Nate holds a bachelor's in business management from Brigham Young University and an MBA in Finance from the University of Washington. He resides in Northwest Arkansas with his wife and four children. Connect with Nate on LinkedIn.

- Why Backlinks Still Matter for LLMs - June 6, 2025